# Ubuntu 16.04 install CUDA software

In the previous post, we proceeded with CUDA 9.1 installation on Ubuntu 16.04 LTS. As with other software that evolves, NVIDIA released CUDA 9.2 back in May.

Once the host prerequisites are in place (the NVIDIA driver and the NVIDIA Container Toolkit), you can simply grab one of the official tensorflow images (if the built-in Python version fits your needs), install pip packages, and start working in minutes. CUDA is included in the container image; you don't need it on the host machine.

Here's an example docker-compose.yml to start a container with tensorflow-gpu. All the container does is test whether any GPU devices are available. Parts of the original file did not survive, so the service name and image tag are assumptions and are marked as such.

```yaml
version: "2.3"  # the only version where 'runtime' option is supported

services:
  test:  # service name assumed from the log prefix shown below
    image: tensorflow/tensorflow:2.3.0-gpu  # assumed tag; use the official image you need
    # Make Docker create the container with NVIDIA Container Toolkit.
    # You don't need it if you set 'nvidia' as the default runtime in
    # the Docker daemon configuration.
    runtime: nvidia
    # the lines below are here just to test that TF can see GPUs
    command:
      - python
      - -c
      - "import tensorflow as tf; tf.test.is_gpu_available(cuda_only=False, min_cuda_compute_capability=None)"
```

Running this with `docker-compose up`, you should see a line with GPU specs in it. It looks like this and appears at the end:

```text
test_1 | 11:02:46.500189: I tensorflow/core/common_runtime/gpu/gpu_:1402] Created TensorFlow device (/device:GPU:0 with 1624 MB memory) -> physical GPU (device: 0, name: GeForce GTX 1050, pci bus id: 0000:01:00.0, compute capability: 6.
```
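The same GPU check can also be run by hand inside any TensorFlow environment. Here is a minimal sketch, assuming TensorFlow 2.1 or later, where `tf.config.list_physical_devices` is the recommended replacement for the deprecated `tf.test.is_gpu_available`. The helper name `visible_gpus` is hypothetical and only there for illustration.

```python
def visible_gpus():
    """Return the names of GPUs TensorFlow can see, or None if TensorFlow is missing."""
    try:
        # Imported lazily so the script still runs where TensorFlow is not installed.
        import tensorflow as tf
    except ImportError:
        return None
    # Non-deprecated counterpart of tf.test.is_gpu_available().
    return [device.name for device in tf.config.list_physical_devices("GPU")]


if __name__ == "__main__":
    gpus = visible_gpus()
    if gpus is None:
        print("TensorFlow is not installed in this environment")
    elif gpus:
        print("GPUs visible to TensorFlow:", ", ".join(gpus))
    else:
        print("TensorFlow is installed but sees no GPU")
```

An empty list inside a GPU image usually suggests the container was started without the NVIDIA runtime, rather than a problem with the image itself.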

# Ubuntu 16.04 install CUDA how to

I'm still not sure whether I can run Docker containers with a different CUDA version than the one installed on my PC. If I can't, NVIDIA messed up big time. Usually, if there is no need to use Docker, we avoid it to spare ourselves the additional complexity; we turn to it when we need to work with multiple environments and conda and virtual environments fail. So if NVIDIA limits usage in Docker containers to one CUDA version, they only intended to allow developers to work on one project with special dependencies per operating system. Please confirm whether I can run containers that each have a specific CUDA version. Moreover, I would greatly appreciate it if someone could point me to a guide on how to use conda environments to build Dockerfiles and how to run a conda env in a Docker container.
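Since each official tensorflow GPU image bundles its own CUDA libraries, containers with different CUDA versions can sit side by side, as long as the host driver is recent enough for the newest one. The sketch below generates a compose file with one service per project. The helper `compose_for`, the project names, and the two image tags are illustrative assumptions, not something taken from the question.

```python
import json


def compose_for(projects):
    """Build a docker-compose (file format 2.3) mapping with one GPU service per project."""
    services = {}
    for name, image in projects.items():
        services[name] = {
            "image": image,
            # 'runtime: nvidia' needs the NVIDIA Container Toolkit on the host.
            "runtime": "nvidia",
            # Print the TensorFlow version so each container identifies itself.
            "command": ["python", "-c", "import tensorflow as tf; print(tf.__version__)"],
        }
    return {"version": "2.3", "services": services}


# Illustrative tags only: each image ships the CUDA version its TensorFlow needs.
PROJECTS = {
    "legacy": "tensorflow/tensorflow:1.12.0-gpu",
    "current": "tensorflow/tensorflow:2.3.0-gpu",
}

if __name__ == "__main__":
    # JSON is a subset of YAML, so this output should be usable as a compose file.
    print(json.dumps(compose_for(PROJECTS), indent=2))
```

Saving the output as `docker-compose.yml` and running `docker-compose up` should start both containers against the same host driver.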

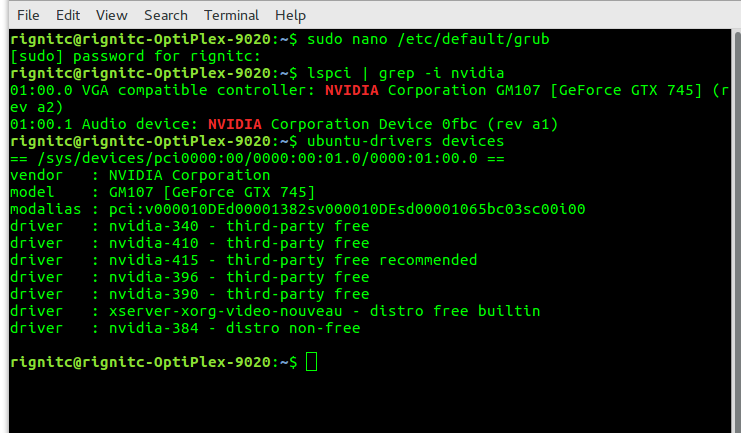

# Ubuntu 16.04 install CUDA driver

I need to run multiple versions of TensorFlow, and each version requires a specific CUDA version. Because I'm working on multiple projects that each require a different version of CUDA, I need to have multiple versions of CUDA on my OS. I tried to use conda and virtual environments to solve that problem. After a while I gave up and started to search for alternatives. Apparently virtual machines can't access the GPU; only if you own a specific GPU type can you run the official NVIDIA visualizer. I was trying to avoid Docker to avoid complexity, but after I failed to install and run multiple versions of CUDA, I turned to it. I installed a fresh image of Ubuntu 16.04 and started to work on Dockerfiles to create custom images for my projects. Apparently you can't access CUDA via Docker directly if you don't install the CUDA driver on the host machine.
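Because the driver has to live on the host, it is worth confirming that it is present before debugging anything inside a container. Here is a minimal sketch, assuming the `nvidia-smi` utility that ships with the NVIDIA driver; the function name `host_driver_version` is hypothetical.

```python
import shutil
import subprocess


def host_driver_version():
    """Return the NVIDIA driver version reported by nvidia-smi, or None if unavailable."""
    if shutil.which("nvidia-smi") is None:
        return None  # driver utilities are not installed on this machine
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=driver_version", "--format=csv,noheader"],
        capture_output=True,
        text=True,
    )
    if result.returncode != 0:
        return None  # nvidia-smi exists but could not talk to the driver
    lines = result.stdout.strip().splitlines()
    # One line is printed per GPU; the driver version is the same for all of them.
    return lines[0].strip() if lines else None


if __name__ == "__main__":
    version = host_driver_version()
    print("NVIDIA driver:", version if version else "not found on this host")
```

If this reports no driver, GPU containers will not see the card no matter which CUDA version the image contains.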
